Introduction

Kubernetes has become the backbone of modern application deployment, offering a robust way to manage containerized workloads. But what makes Kubernetes so effective? It all starts with understanding its basic components and architecture. In this blog, I’ll guide you through the essential building blocks of Kubernetes and explain how they interact to create a powerful and scalable system. By the end, you’ll have a clear picture of how Kubernetes orchestrates containers to keep applications highly available and scale them as demand changes. Let’s dive in and unravel the fundamentals of Kubernetes together!

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates various tasks involved in deploying, managing, and scaling containerized applications. Initially developed by Google, Kubernetes is now maintained by the Cloud Native Computing Foundation (CNCF).

Why Kubernetes?

Kubernetes is not just another buzzword in the world of cloud and containers; it has fundamentally transformed the way modern applications are deployed and managed. Here's why it stands out:

Streamlined Container Management

Managing containers individually can quickly become complex as the number of services grows. Kubernetes provides a unified platform to deploy, scale, and maintain containers, ensuring consistency across development and production environments.

Robust Scalability and Performance

Kubernetes allows applications to scale to handle increased demand while maintaining performance. When autoscaling is configured, it can add replicas as load rises and remove them as load falls, so you use closer to the resources you actually need, which can lead to significant cost savings.

Enhanced Resilience and Self-Healing

Kubernetes actively monitors the health of your applications and automatically replaces failed containers or restarts them if something goes wrong. This minimizes downtime and ensures high availability, even during unexpected failures.
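Much of this self-healing is done by the kubelet, the agent that runs on each node. By default it restarts a crashing container after an exponential back-off delay of 10 seconds, then 20, then 40, and so on, capped at five minutes (the familiar `CrashLoopBackOff` state). The sketch below only computes that delay schedule; `restart_delay` is a hypothetical helper, not a Kubernetes API.

```python
# Simplified model of the kubelet's default restart back-off schedule.
# restart_delay is a hypothetical helper for illustration only.
INITIAL_DELAY_S = 10  # first back-off delay, in seconds
MAX_DELAY_S = 300     # cap of five minutes


def restart_delay(restart_index: int) -> int:
    """Seconds to wait before restart number `restart_index` (0-based) of a crashing container."""
    if restart_index < 0:
        raise ValueError("restart_index must be non-negative")
    # Double the delay for each consecutive crash, but never exceed the cap.
    return min(INITIAL_DELAY_S * 2 ** restart_index, MAX_DELAY_S)


if __name__ == "__main__":
    print([restart_delay(n) for n in range(7)])
```

Running it prints `[10, 20, 40, 80, 160, 300, 300]`: after five crashes the delay stops growing and stays at the five-minute cap.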

Kubernetes Architecture

In this section, we’ll dive into Kubernetes architecture. Understanding it is crucial to grasp how Kubernetes efficiently manages containerized applications.

Master Node

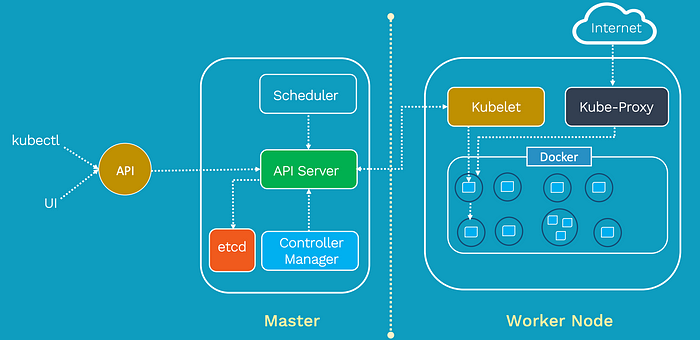

The heart of a Kubernetes cluster is the Master Node, which current Kubernetes documentation calls the control plane. It manages the overall cluster state and makes global decisions about the cluster, such as scheduling new pods, monitoring the health of nodes and pods, and scaling applications based on demand.

The Master Node consists of several key components:

API Server (kube-apiserver):

Acts as the entry point for all administrative tasks in a Kubernetes cluster. It exposes the Kubernetes API over REST, validates requests from users, tools such as kubectl, and other components, and persists the resulting objects (the cluster's desired and current state) in etcd.
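To make "RESTful requests" concrete: every object lives at a predictable URL on the API server. Core resources such as Pods are served under `/api/v1`, while grouped resources such as Deployments (group `apps`) are served under `/apis/<group>/<version>`. The helper below is a hypothetical sketch of that URL layout, not part of any Kubernetes client library; on a running cluster you can compare its output with `kubectl get --raw /api/v1/namespaces/default/pods`.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the REST path for a resource (hypothetical helper).

    group="" means the legacy "core" group (Pods, Services, Nodes, ...),
    which lives under /api; every other group lives under /apis/<group>.
    """
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace is not None:
        # Namespaced resources are nested under /namespaces/<namespace>.
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        # Adding a name addresses a single object instead of the collection.
        parts.append(name)
    return "/".join(parts)


if __name__ == "__main__":
    # Collection of Pods in the "default" namespace.
    print(resource_path("", "v1", "pods", namespace="default"))
    # A single Deployment named "web".
    print(resource_path("apps", "v1", "deployments", namespace="default", name="web"))
    # Nodes are cluster-scoped, so there is no namespace segment.
    print(resource_path("", "v1", "nodes"))
```

The HTTP verb selects the operation on a path: GET reads or lists, POST creates, PUT and PATCH update, and DELETE removes.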

Controller Manager (kube-controller-manager):

Runs the cluster's built-in controllers, which are control loops for concerns such as node management, replication, and endpoint management. Each controller continuously works to bring the cluster's current state in line with the desired state.
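The control-loop idea behind the Controller Manager is simple: observe the current state, compare it with the desired state, and act on the difference, over and over. Below is a deliberately simplified model that tracks only replica counts per workload. The function names and the dictionary representation are illustrative assumptions; real controllers watch the API server for changes rather than looping over a local dictionary.

```python
from typing import Dict


def reconcile_once(desired: Dict[str, int], actual: Dict[str, int]) -> Dict[str, int]:
    """Diff desired vs. actual replica counts.

    Returns {workload: delta}; a positive delta means "create that many pods",
    a negative delta means "delete that many pods".
    """
    actions = {}
    for workload in desired.keys() | actual.keys():
        delta = desired.get(workload, 0) - actual.get(workload, 0)
        if delta != 0:
            actions[workload] = delta
    return actions


def control_loop(desired: Dict[str, int], actual: Dict[str, int],
                 max_iterations: int = 10) -> Dict[str, int]:
    """Observe, diff, and act until the actual state matches the desired state."""
    state = dict(actual)  # work on a copy of the observed state
    for _ in range(max_iterations):
        actions = reconcile_once(desired, state)
        if not actions:
            break  # converged: nothing left to fix
        for workload, delta in actions.items():
            # A real controller would ask the API server to create/delete Pods here.
            state[workload] = state.get(workload, 0) + delta
            if state[workload] == 0:
                del state[workload]
    return state


if __name__ == "__main__":
    desired = {"web": 3, "api": 2}
    observed = {"web": 1, "old-job": 1}
    print(reconcile_once(desired, observed))  # per-workload corrections
    print(control_loop(desired, observed))    # state after reconciling
```

Here the loop adds two `web` replicas, creates two `api` replicas, and removes the stray `old-job` Pod, after which a second pass finds nothing to do.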

Scheduler (kube-scheduler):

Assigns newly created pods to nodes based on resource requirements, policies, and availability, aiming to place each pod where it fits best and to use cluster resources efficiently.
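The kube-scheduler works in two broad phases: it filters out nodes that cannot run the Pod, then scores the remaining nodes and picks the highest score. The sketch below models only CPU and memory requests. Its scoring rule (prefer the node with the most resources left free) is a simplified stand-in loosely modeled on the default "least allocated" strategy, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int    # allocatable CPU in millicores
    mem_capacity_mi: int   # allocatable memory in MiB
    cpu_requested_m: int   # CPU already requested by Pods on this node
    mem_requested_mi: int  # memory already requested by Pods on this node


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def fits(pod: PodRequest, node: Node) -> bool:
    """Filter phase: does the node have enough unrequested CPU and memory?"""
    return (node.cpu_requested_m + pod.cpu_m <= node.cpu_capacity_m
            and node.mem_requested_mi + pod.mem_mi <= node.mem_capacity_mi)


def score(pod: PodRequest, node: Node) -> float:
    """Score phase: average fraction of CPU and memory left free after placement (0-100)."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - pod.mem_mi) / node.mem_capacity_mi
    return 100 * (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the name of the best feasible node, or None if the Pod would stay Pending."""
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(pod, n)).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", 4000, 8192, 3500, 1024),  # too little CPU left: filtered out
        Node("node-b", 4000, 8192, 1000, 6144),  # scores 31.25
        Node("node-c", 2000, 4096, 500, 512),    # scores 43.75
    ]
    print(schedule(PodRequest(cpu_m=1000, mem_mi=1024), nodes))  # node-c
```

If no node passes the filter, `schedule` returns `None`, which corresponds to a Pod that stays in the Pending state until capacity frees up.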

etcd:

A consistent, highly available, distributed key-value store that serves as the backing store for all cluster data. All information about the cluster’s state and configuration is stored here.
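Because etcd is a flat key-value store, the API server maps each object to a key, and by default those keys start with `/registry` (for example, Pods under `/registry/pods/<namespace>/<name>`). The toy store below imitates that idea in memory so you can see how "list all Pods in a namespace" becomes a prefix read. `ToyKVStore` and `pod_key` are hypothetical names, and the sketch ignores what makes etcd valuable in practice (Raft replication, revisions, watches). Real clusters also store values in protobuf rather than JSON.

```python
import json
from typing import Dict, List, Optional, Tuple


class ToyKVStore:
    """In-memory stand-in for etcd: flat string keys mapped to string values."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def get_prefix(self, prefix: str) -> List[Tuple[str, str]]:
        """Return all (key, value) pairs under a prefix, sorted by key like a range read."""
        return [(k, v) for k, v in sorted(self._data.items()) if k.startswith(prefix)]


def pod_key(namespace: str, name: str) -> str:
    # Assumed layout, modeled on the API server's default "/registry" key prefix.
    return f"/registry/pods/{namespace}/{name}"


if __name__ == "__main__":
    store = ToyKVStore()
    for ns, name in [("default", "web-1"), ("default", "web-2"), ("data", "db-0")]:
        # JSON keeps this sketch readable; real clusters default to protobuf.
        obj = {"kind": "Pod", "metadata": {"name": name, "namespace": ns}}
        store.put(pod_key(ns, name), json.dumps(obj))
    # The trailing "/" keeps a namespace like "default-2" out of the results.
    for key, _ in store.get_prefix("/registry/pods/default/"):
        print(key)
```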

Worker Nodes:

The Worker Nodes are the machines where containers (pods) are scheduled and run. They form the data plane of the cluster, executing the actual workloads. Each Worker Node runs several key components:

Kubelet: The Kubelet is the agent that runs on each Worker Node and communicates with the control plane through the API server. It ensures that the containers described in the pod specifications are running and healthy.

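Container Runtime: The Container Runtime is responsible for pulling container images and running containers on the Worker Nodes. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O. Docker Engine can still be used through the cri-dockerd adapter, since built-in Docker support (dockershim) was removed in Kubernetes v1.24.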

Kube Proxy: Kube Proxy runs on each node and maintains the network rules (for example, iptables or IPVS rules) that forward traffic sent to a Service to one of the Pods behind it, which also load-balances requests across those Pods.
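In its default iptables mode on Linux, kube-proxy sends each new connection for a Service to one of its ready endpoints chosen at random. It does this with a chain of rules in which each rule matches with a probability chosen so that the overall choice is uniform (IPVS mode instead defaults to round-robin). The Python sketch below reproduces only that probability cascade; it is an illustration, not kube-proxy code, and the endpoint addresses are made up.

```python
import random
from typing import List


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Mimic the random rule cascade used by iptables-mode Service rules.

    Rule i (0-based) matches with probability 1 / (n - i); the last rule always
    matches. Overall, every endpoint is chosen with probability 1 / n.
    """
    n = len(endpoints)
    if n == 0:
        raise LookupError("Service has no ready endpoints")
    for i, endpoint in enumerate(endpoints[:-1]):
        if rng.random() < 1 / (n - i):
            return endpoint
    return endpoints[-1]


if __name__ == "__main__":
    # Hypothetical Pod IPs backing one Service.
    backends = ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.9:8080"]
    rng = random.Random(42)
    counts = {b: 0 for b in backends}
    for _ in range(30_000):
        counts[pick_endpoint(backends, rng)] += 1
    print(counts)  # roughly 10,000 each
```

For three endpoints the first rule fires one third of the time, the second fires half of the remaining two thirds, and the last catches the rest, so each backend gets one third of connections.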

Kubernetes Objects and Resources

Kubernetes operates around various objects that define the desired state of your system. These objects are crucial for ensuring that the desired state is achieved and maintained over time. Below are some of the key objects in Kubernetes:

Pods:

A Pod is the smallest deployable unit in Kubernetes. It encapsulates one or more containers, shared storage resources, and a unique IP address within the cluster.

Pods run on worker nodes and are used to host application containers.

A pod's containers share the same network namespace, which means they can communicate with each other using localhost.
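Here is what a two-container Pod looks like as a manifest. It is built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts as well as YAML. The sidecar reaches the web server at `http://localhost:80` because both containers share the Pod's network namespace. The Pod name, container names, images, and polling command are illustrative assumptions.

```python
import json


def two_container_pod(name: str = "web-with-sidecar") -> dict:
    """Build a Pod manifest whose sidecar talks to the main container over localhost."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "web"}},
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {
                    "name": "probe-sidecar",
                    "image": "busybox:1.36",
                    # Same network namespace, so "localhost" reaches the nginx container.
                    "command": [
                        "sh", "-c",
                        "while true; do wget -qO- http://localhost:80 > /dev/null"
                        " && echo reached-web; sleep 5; done",
                    ],
                },
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod(), indent=2))
```

Assuming the script is saved as `pod.py`, you could run `python pod.py > pod.json`, apply it with `kubectl apply -f pod.json`, and check the sidecar with `kubectl logs web-with-sidecar -c probe-sidecar`.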

ReplicaSets:

A ReplicaSet ensures that a specified number of replicas (identical copies) of a pod are running at any given time.

It automatically creates or deletes pods to keep the actual number of replicas equal to the desired number, for example by replacing pods lost to failures.

ReplicaSets are often managed by Deployments to ensure smooth updates and scaling.
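A ReplicaSet decides which Pods it is responsible for through a label selector, then creates or deletes Pods until the number of matching, active Pods equals its desired replica count. The sketch below models only the `matchLabels` form of the selector and treats Pods in the `Pending` or `Running` phase as active. The real controller also tracks owner references and handles terminating Pods, so treat the helper names and the simplified Pod dictionaries as assumptions.

```python
from typing import Dict, List

ACTIVE_PHASES = {"Pending", "Running"}


def selector_matches(match_labels: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """A Pod matches when it carries every key/value pair in the selector."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


def replica_delta(desired: int, match_labels: Dict[str, str], pods: List[dict]) -> int:
    """Pods to create (positive) or delete (negative) to reach `desired` active replicas."""
    active = [
        p for p in pods
        if selector_matches(match_labels, p["labels"]) and p["phase"] in ACTIVE_PHASES
    ]
    return desired - len(active)


if __name__ == "__main__":
    pods = [
        {"name": "web-a", "labels": {"app": "web", "tier": "frontend"}, "phase": "Running"},
        {"name": "web-b", "labels": {"app": "web"}, "phase": "Failed"},
        {"name": "db-a", "labels": {"app": "db"}, "phase": "Running"},
    ]
    print(replica_delta(3, {"app": "web"}, pods))  # 2: only web-a counts
```

Extra labels such as `tier` do not prevent a match, while a failed Pod or a Pod with a different `app` label is not counted.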

Deployments:

![Kubernetes Deployment Overview | 小信豬的原始部落](https://godleon.github.io/blog/images/kubernetes/k8s-deployment.png)

Deployments manage the lifecycle of ReplicaSets and provide declarative updates for applications.

They allow you to define a desired state for your pods, including the number of replicas, the container image versions to run, and the update strategy.

Deployments make it easy to roll back to a previous state in case of a failure and can handle rolling updates to minimize downtime.
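During a rolling update, two Deployment settings bound how far the Pod count can drift: `maxSurge` (extra Pods allowed above the desired count) and `maxUnavailable` (Pods allowed to be unavailable). Both default to 25%, and when given as percentages Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The hypothetical helper below computes the resulting limits so you can see what "minimize downtime" means numerically.

```python
from typing import Tuple


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> Tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update.

    Percentages are converted the way Kubernetes documents: maxSurge rounds up,
    maxUnavailable rounds down. Integer math avoids float rounding surprises.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    for r in (1, 4, 10):
        print(r, rolling_update_bounds(r))
```

With the defaults, a 10-replica Deployment may briefly run up to 13 Pods while keeping at least 8 available, and a single-replica Deployment surges to 2 Pods without ever dropping below 1.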

Services:

A Service in Kubernetes is an abstraction that defines a logical set of Pods and provides a stable endpoint for accessing them. It enables communication between Pods, both within the cluster and externally, by exposing them through a single DNS name or IP address.

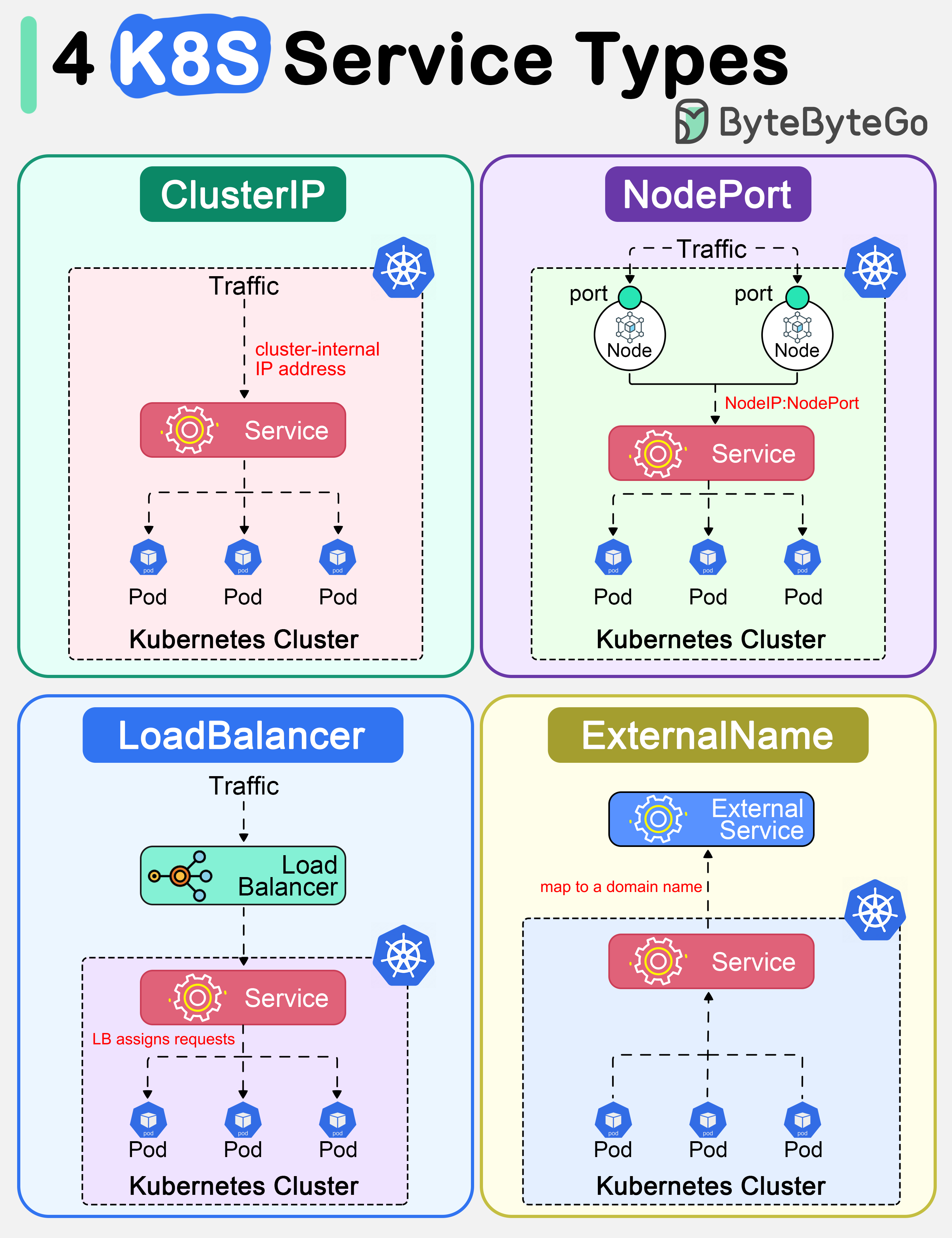

Kubernetes supports several types of Services:

ClusterIP: Exposes the service on an internal IP within the cluster, making it accessible only to other services and Pods in the cluster.

NodePort: Exposes the service on a static port on each node’s IP, allowing external access to the service through `<NodeIP>:<NodePort>`.

LoadBalancer: Creates an external load balancer (usually via a cloud provider), providing a single entry point to access the service from outside the cluster.

ExternalName: Maps the service to an external DNS name, allowing access to an external resource as if it were a service within the cluster.
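As a concrete example of these Service fields, here is a NodePort Service built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts just like YAML. The selector ties the Service to Pods labeled `app: web`; the names and port numbers are illustrative assumptions. If `nodePort` is omitted, Kubernetes allocates one from the node-port range, which is 30000-32767 by default.

```python
import json
from typing import Optional

# Default node-port range (configurable on the API server).
NODE_PORT_RANGE = range(30000, 32768)


def nodeport_service(name: str, app_label: str, port: int, target_port: int,
                     node_port: Optional[int] = None) -> dict:
    """Build a Service manifest of type NodePort that selects Pods by their `app` label."""
    if node_port is not None and node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    port_spec = {"protocol": "TCP", "port": port, "targetPort": target_port}
    if node_port is not None:
        port_spec["nodePort"] = node_port  # otherwise the control plane picks one
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",  # other types: ClusterIP (default), LoadBalancer, ExternalName
            "selector": {"app": app_label},
            "ports": [port_spec],
        },
    }


if __name__ == "__main__":
    svc = nodeport_service("web", "web", port=80, target_port=80, node_port=30080)
    print(json.dumps(svc, indent=2))
```

On Minikube, `minikube service web --url` prints a URL for reaching such a Service from your machine.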

Why Learn Kubernetes?

Kubernetes offers several key benefits that make it a powerful platform for managing modern applications. Scalability is one of its core features: with autoscaling configured, it adjusts the number of running application instances based on real-time demand, helping keep resource utilization efficient. With Kubernetes, applications can run consistently across different environments, providing portability that abstracts away the underlying infrastructure differences and simplifies deployment across on-premises, private cloud, or public cloud setups. Resilience is another advantage, as Kubernetes supports high availability by automatically recovering from failures, rescheduling pods, and maintaining uptime without manual intervention. Lastly, Kubernetes integrates well with the wider ecosystem, working with CI/CD tools, cloud providers, and monitoring systems, which makes it an essential component of modern DevOps workflows for automated deployments and continuous monitoring.
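The Horizontal Pod Autoscaler, which provides this automatic adjustment, is documented to compute `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and to skip scaling when that ratio is within a tolerance (0.1 by default). The hypothetical helper below applies the formula and then clamps the result to the min/max replica bounds you configure. It leaves out the stabilization windows and multi-metric handling of the real controller.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Apply the HPA scaling formula, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5.
    print(hpa_desired_replicas(3, current_metric=90, target_metric=60))
```

Scaling down works the same way: 10 replicas at 20% CPU against a 60% target give `ceil(10 * 20 / 60) = 4`.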

How I Started Practicing Kubernetes on My Local Machine:

When I first decided to dive into Kubernetes, I wanted a simple, hands-on way to learn without relying on cloud infrastructure. After some research, I found that Minikube was an ideal choice for running a local Kubernetes cluster. Minikube allows you to create a single-node Kubernetes cluster on your local machine, making it perfect for beginners like me.

I started by installing Minikube and running it with its Docker driver, which runs the cluster inside a Docker container on my local machine. This setup allowed me to simulate a Kubernetes cluster without needing complex infrastructure. Once I had Minikube running, I was able to deploy and manage Pods, Services, and explore other Kubernetes features. By experimenting with these components in a local environment, I gradually built a better understanding of how Kubernetes operates, step by step.

Conclusion:

Kubernetes may seem complex at first, but its architecture is logical and modular, making it approachable for beginners. By understanding the roles of the master (control plane) and worker nodes, along with core objects like Pods, Deployments, and Services, you’ll be well on your way to mastering Kubernetes. For me, getting hands-on with Kubernetes by setting it up on my local machine through Minikube was the perfect starting point. By running a local Kubernetes cluster inside Docker, I was able to experiment freely, gain practical experience, and learn step by step.

Happy learning!